Ignition + AI: the formula for accelerating innovation in the factory

Nicolas Chaparro Tolosa

Smart industry consultant

In industry, innovation is not just about doing things faster. It’s about implementing changes that are guaranteed to work. When an operating screen changes, a tag is recalculated, or an order flow is adjusted, the impact is felt beyond the IT department. It affects production, quality, safety, and operational continuity. This is why, even though artificial intelligence is revolutionizing software development, the question on the factory floor is not whether it speeds things up, but whether it does so without losing control.

At IDOM, we set out to test whether that balance is achievable in a real-world development environment using Ignition, a leading industrial platform for monitoring, integration, and operation. Rather than a flashy demo, we wanted a responsible, measurable way of working, with traceability, clear criteria, and replicable results. This document describes our process, the lessons we learned, and, most importantly, how to use AI as a practical ally to deliver faster without compromising project stability.

How to make AI add value in Ignition without disrupting operations

In industry, a productivity gain only counts if it comes without losing control. That’s where many artificial intelligence initiatives fall short. In a plant, a poorly executed change isn’t just a minor error; it can lead to downtime, rework, operational risk, or weeks of stabilization.

That is precisely why, at IDOM, we began this line of research. We wanted to answer a specific question: Can we accelerate development in Ignition without sacrificing traceability, quality, or security? AI has enormous potential, but if it is not systematically integrated into an engineering method, its applications remain limited to demonstrations that cannot survive in the real world.

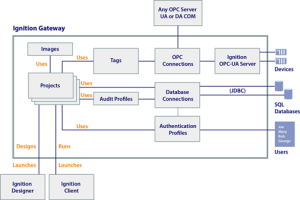

For those unfamiliar with it, Ignition is not just another application. It is a platform used in the industrial world to develop SCADA (supervisory control and data acquisition) solutions and integrate with MES (manufacturing execution system) layers. In practice, Ignition is at the heart of operations, connecting data, screens, alarms, and users. It allows for the integration of different systems and levels of industrial architecture, from plant devices to enterprise applications.

Its architecture is built around a central gateway where projects, tags, connections, and other elements coexist. Through modules such as the OPC UA Module and the MQTT Module Family (MQTT is a lightweight messaging protocol widely used in the Industrial Internet of Things, or IIoT), it can communicate with industrial equipment and external systems. It acts as a bridge between the world of Operational Technology (OT), which includes equipment, sensors, and process control, and the world of Information Technology (IT), which includes applications, data, networks, and corporate systems.

Why AI is not plug-and-play in Ignition

However, this same versatility is why using AI with Ignition is not plug-and-play. Ignition has particularities that a general-purpose AI tool may be unaware of. Part of the scripting (the small functions that automate rules and validations within the project) is written in Jython, an implementation of Python that runs on the Java Virtual Machine and follows Python 2.7 syntax. In addition, some project components are stored in formats that are difficult to modify by hand, and Ignition’s native scripting functions (the system.* library) rarely appear in public examples. The result: AI may suggest something that sounds plausible but doesn’t fit the project’s specific needs.
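To illustrate the Jython detail, here is a minimal sketch of two pitfalls that often trip up AI-generated code: f-strings, which are a syntax error in Python 2.7, and division between integers, which truncates in Python 2.7. The scrap_rate and describe helpers are hypothetical, written only to show a style that runs unchanged under both Jython 2.7 and CPython 3; they are not code from our project.

```python
# Hypothetical helpers, written in a style that runs unchanged under
# Jython 2.7 (Ignition scripting) and CPython 3.


def scrap_rate(scrapped, produced):
    """Return scrapped units as a percentage of units produced."""
    if produced <= 0:
        return 0.0
    # The 100.0 float literal forces float division. With plain integers,
    # Jython 2.7 would truncate 700 / 200 to 3 instead of 3.5.
    return 100.0 * scrapped / produced


def describe(line, rate):
    # str.format() instead of an f-string: f-strings are a SyntaxError
    # in Jython 2.7.
    return "Line {0}: {1:.1f}% scrap".format(line, rate)


# print with a single argument behaves the same in Python 2.7 and 3.
print(describe("L1", scrap_rate(7, 200)))
```

An alternative to the float literal is placing from __future__ import division at the top of the module, which gives Python 3 division semantics under Python 2.7.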

That was exactly what we experienced. In the initial iterations, GitHub Copilot suggested changes that were larger than requested, misunderstood the differences between Jython and standard Python, and misused native functions for lack of context. In short, applying its suggestions as generated led to errors and unexpected behavior.

The key: contextualization and specific guidelines

This taught us a key lesson: the value lies not in writing longer instructions, but in giving the AI the right context to work in line with the project. That’s why we created AGENTS.md, a contextualization guide that explains how we work with Ignition, including our conventions, restrictions, API examples, folder patterns, and security criteria.

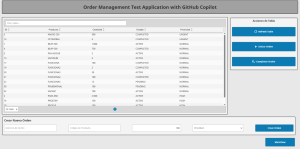

Furthermore, instead of relying on a single massive text file, we took a more practical approach: brief general context files plus files with task-specific instructions, commonly known as prompts. We used these for specific tasks such as database queries, scripting, APIs, and Perspective (the Ignition module for building industrial web interfaces). With this foundation in place, we ran a controlled demonstration on Ignition 8.1.38: building a complete order management module that creates, queries, updates, and deletes records. In software, this set of operations is known as CRUD (create, read, update, delete), and it covers the complete data life cycle.
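To make the CRUD idea concrete, here is a minimal sketch of the four operations for a hypothetical orders table. It is an illustration under stated assumptions, not the module we built: an in-memory SQLite database stands in for the project database so the code runs outside Ignition, and the table, columns, and function names are invented for the example. Inside Ignition, the same parameterized SQL would typically be executed through system.db.runPrepQuery and system.db.runPrepUpdate.

```python
import sqlite3

# Hypothetical schema, for illustration only. SQLite stands in for the
# project database so the sketch runs outside Ignition.
SCHEMA = """
CREATE TABLE orders (
    id       INTEGER PRIMARY KEY AUTOINCREMENT,
    product  TEXT    NOT NULL,
    quantity INTEGER NOT NULL CHECK (quantity > 0),
    status   TEXT    NOT NULL DEFAULT 'NEW'
)
"""


def create_order(conn, product, quantity):
    # "?" placeholders instead of string concatenation prevent SQL injection.
    cur = conn.execute(
        "INSERT INTO orders (product, quantity) VALUES (?, ?)",
        (product, quantity))
    conn.commit()
    return cur.lastrowid  # id generated for the new order


def get_order(conn, order_id):
    row = conn.execute(
        "SELECT id, product, quantity, status FROM orders WHERE id = ?",
        (order_id,)).fetchone()
    if row is None:
        return None
    return dict(zip(("id", "product", "quantity", "status"), row))


def update_order_status(conn, order_id, status):
    cur = conn.execute(
        "UPDATE orders SET status = ? WHERE id = ?", (status, order_id))
    conn.commit()
    return cur.rowcount  # rows changed (0 if the order does not exist)


def delete_order(conn, order_id):
    cur = conn.execute("DELETE FROM orders WHERE id = ?", (order_id,))
    conn.commit()
    return cur.rowcount  # rows deleted (0 if the order does not exist)


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
oid = create_order(conn, "Valve-20", 50)
update_order_status(conn, oid, "RELEASED")
print(get_order(conn, oid))
delete_order(conn, oid)
```

Whatever the database layer, the design choice worth keeping is the use of parameter placeholders rather than string concatenation, which is the kind of security criterion a contextualization guide can state explicitly.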

Results: faster development, fewer adjustments, and higher quality

The result was a solid order management module covering the entire data cycle, from creation and querying to updating and deletion. By using GitHub Copilot as an assistant with appropriate context, we significantly shortened the development cycle. Speed was not the only gain: we kept technical control and needed fewer follow-up adjustments, because the tool worked within our rules, conventions, and restrictions from the outset.

We also identified another important benefit: using AI to generate unit tests that validate core business functions. Simply put, these tests are automatic checks that confirm essential functions keep working after each change. The result is fewer failures, higher quality, and greater confidence when releasing new versions, particularly in environments where downtime is costly.
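As an example of such checks, here is a minimal sketch of unit tests for a hypothetical order validation rule, using Python’s standard unittest module (also available in Jython 2.7). The validate_order function and the MAX_QUANTITY limit are assumptions made for illustration, not rules from the demonstration project.

```python
import unittest

MAX_QUANTITY = 10000  # hypothetical business limit, for illustration only


def validate_order(product, quantity):
    """Return a list of validation errors; an empty list means the order is valid."""
    errors = []
    if not product or not product.strip():
        errors.append("product is required")
    if not isinstance(quantity, int) or quantity <= 0:
        errors.append("quantity must be a positive integer")
    elif quantity > MAX_QUANTITY:
        errors.append("quantity exceeds the maximum of %d" % MAX_QUANTITY)
    return errors


class ValidateOrderTest(unittest.TestCase):
    def test_valid_order_has_no_errors(self):
        self.assertEqual(validate_order("Valve-20", 50), [])

    def test_blank_product_is_rejected(self):
        self.assertIn("product is required", validate_order("  ", 5))

    def test_non_positive_quantity_is_rejected(self):
        self.assertEqual(validate_order("Valve-20", 0),
                         ["quantity must be a positive integer"])

    def test_quantity_above_limit_is_rejected(self):
        self.assertEqual(len(validate_order("Valve-20", MAX_QUANTITY + 1)), 1)


# Run the tests explicitly so the script does not exit when they finish.
suite = unittest.TestLoader().loadTestsFromTestCase(ValidateOrderTest)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

Keeping business rules in plain functions like this, separate from screens and database calls, is what makes them easy to test automatically after every change.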

In summary, this pilot yielded a clear conclusion: AI delivers better results when it is given quality context. On industrial platforms with their own restrictions and particularities, human supervision is non-negotiable and part of the process. The key is to iterate: adjust the context, test, measure, and correct. Productivity does not come from demanding more of the tool, but from creating the right conditions for it to generate value consistently.

We are now applying this approach to real projects because its potential is clear: combining good engineering practices with AI as a co-pilot to deliver faster without losing traceability or control.

If you are wondering whether this applies to your operations, the first step is simple: identify which part of the development cycle takes up most of your time, whether backend, screens, testing, documentation, or deployment. Then design a controlled pilot with clear rules.

Looking to the future

AI can significantly accelerate development in Ignition, but it doesn’t work by magic. It only works when it is given quality context and integrated into a clear, structured workflow. In addition to using GitHub Copilot as an assistant, we created a work guide that explains how we operate, what restrictions apply, and which patterns we follow. By specific instruction files, we mean short, targeted texts that guide the AI through particular tasks, such as database queries, scripting, or integration. These files reduce ambiguity and the need for rework.

Using this approach, we achieved measurable results in a controlled demonstration. We built a complete module in less than a day, saving significant time compared with traditional development. We also improved quality by generating tests that validate core functions. Beyond the figures, the most valuable benefits are shorter delivery cycles, less friction within the team, and a repeatable basis for scaling adoption without jeopardizing operations.

We are also closely following the launch of the new MCP (Model Context Protocol) module announced by Inductive Automation. MCP is a standard that allows applications to expose context, tools, and resources to AI models in a secure, structured way. The upcoming MCP Server will enable Ignition to communicate with AI agents to perform actions, query data, and access tools in a controlled manner. This will expand what AI and Ignition can do together today and enable more secure, contextual, and native processes within the Gateway. We are excited because it will reinforce and accelerate the very practices we are developing.
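For readers curious about what MCP looks like on the wire, here is a minimal sketch of a client-side tools/call exchange. MCP messages are JSON-RPC 2.0 requests and responses; the tool name get_order_status, its arguments, and the sample response are hypothetical and do not describe the tools that Inductive Automation’s module will actually expose.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build an MCP 'tools/call' request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def extract_text(response_json):
    """Return the text items of a tools/call result, raising on errors."""
    msg = json.loads(response_json)
    if "error" in msg:  # JSON-RPC level error (e.g. unknown method)
        raise RuntimeError(msg["error"].get("message", "unknown error"))
    result = msg["result"]
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError("tool reported an error")
    return [item["text"] for item in result.get("content", [])
            if item.get("type") == "text"]


# Hypothetical tool name and arguments, not taken from the Ignition module.
request = build_tool_call(1, "get_order_status", {"order_id": 42})
print(request)

# A hand-written sample response shaped like an MCP tools/call result.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "content": [{"type": "text", "text": "Order 42: RELEASED"}],
        "isError": False,
    },
})
print(extract_text(sample_response))
```

The point of the structure is that the AI agent never touches the system directly: it can only call tools the server chooses to expose, with arguments the server can validate.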